Thank you both. Here I describe the process that apparently ended in success:

$ zfs get all data/set | egrep "encr|key"

data/set encryption aes-256-gcm -

data/set keylocation prompt local

data/set keyformat passphrase -

data/set encryptionroot data/set -

data/set keystatus available -
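Instead of filtering `zfs get all` through egrep, `zfs get` also accepts a comma-separated list of property names, and `-r` includes all child datasets. This is a minimal sketch; the function name `show_crypto` is a hypothetical helper, not part of ZFS:

```shell
# Hypothetical helper: print only the encryption-related properties
# of a pool/dataset and all of its children.
show_crypto() {
    # -r: recurse into child datasets
    # -o: limit the output columns to name, property and value
    zfs get -r -o name,property,value \
        encryption,encryptionroot,keyformat,keylocation,keystatus "$1"
}
```

Usage: `show_crypto data`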

$ sudo zpool export data

$ sudo zpool import data

cannot import 'data': no such pool available

Other commands such as “zpool import -a” did not work either. I had to find the partition number using “lsblk”, and then this worked:

sudo zpool import -d /dev/sdb1 poolnamehere

It did not ask for any decryption passphrase, though. Maybe that is because the pool itself is not encrypted, only the dataset?
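As far as I know, encryption in ZFS is a per-dataset property, and a plain `zpool import` does not load any encryption keys: encrypted datasets such as data/set simply stay locked and unmounted, so no passphrase is asked. The `-l` option of `zpool import` should make it prompt for the keys while importing. A minimal sketch with a hypothetical helper name:

```shell
# Hypothetical helper: import a pool and unlock its encrypted datasets in one step.
#   $1: device or directory to search, e.g. the partition found with lsblk
#   $2: pool name
import_unlocked() {
    # -d: where to look for the pool's devices
    # -l: load encryption keys (prompting for passphrases) while importing
    sudo zpool import -d "$1" -l "$2"
}
```

Usage: `import_unlocked /dev/sdb1 data`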

$ zfs get all|grep crypt

data encryption off default

data/set encryption aes-256-gcm -

data/set encryptionroot data/set -

$ zpool get all data|grep crypt

data feature@encryption active local

The first command shows encryption as off, the second as active. When I tried “zpool create -o encryption=on” etc., it complained about an invalid pool property, and setting it on the existing pool's root dataset fails as well:

$ zfs set encryption=on data

cannot set property for 'data': 'encryption' is readonly
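The readonly error is expected as far as I know: the `encryption` property can only be chosen when a dataset is created (for a pool's root dataset that means at `zpool create` time), so an existing unencrypted dataset cannot be converted in place. One workaround is to create a new encrypted dataset and copy the files into it. A minimal sketch; the helper name and the dataset name `data/secure` are placeholders, and `cp -a` is just one way to copy (rsync would also do):

```shell
# Hypothetical helper: move files into a newly created encrypted dataset,
# since encryption cannot be switched on for an existing one.
#   $1: directory holding the current, unencrypted files
#   $2: name for the new dataset, e.g. data/secure (placeholder)
migrate_to_encrypted() {
    # Asks for a new passphrase for the new dataset.
    sudo zfs create -o encryption=on -o keyformat=passphrase "$2" || return 1
    # Look up where the new dataset was mounted.
    mnt=$(zfs get -H -o value mountpoint "$2") || return 1
    # Copy everything, preserving ownership, permissions and timestamps.
    sudo cp -a "$1"/. "$mnt"/
}
```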

Then I found the -O (not -o) parameter, which seems to get past that error:

sudo zpool create -O encryption=on data2 /dev/sdb

/dev/sdb1 is part of exported pool ‘data’

I tried to destroy the pool (both mounted and unmounted), as I had not found a way to apply encryption to it, but it shows a seemingly wrong message:

$ zpool destroy -f data

cannot open ‘data’: no such pool
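The message is probably accurate: `zpool destroy` only operates on pools that are currently imported, and `data` had been exported just before. A minimal sketch of importing it first and then destroying it (hypothetical helper name). Note that `zpool destroy` irreversibly deletes everything in the pool; afterwards the disk should no longer be reported as part of an exported pool.

```shell
# Hypothetical helper: destroy a pool that is currently exported.
# WARNING: zpool destroy permanently deletes all data in the pool.
#   $1: device or directory holding the pool, e.g. /dev/sdb1
#   $2: pool name
destroy_exported_pool() {
    # zpool destroy only sees imported pools, so import first
    sudo zpool import -d "$1" "$2" || return 1
    sudo zpool destroy "$2"
}
```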

Anyway, the following is the process that worked and is repeatable. At the end of this post I have one more question.

Enable ZFS support in the kernel (as mentioned, it was not enabled in 5.8.16-2-MANJARO after a reboot):

sudo /sbin/modprobe zfs

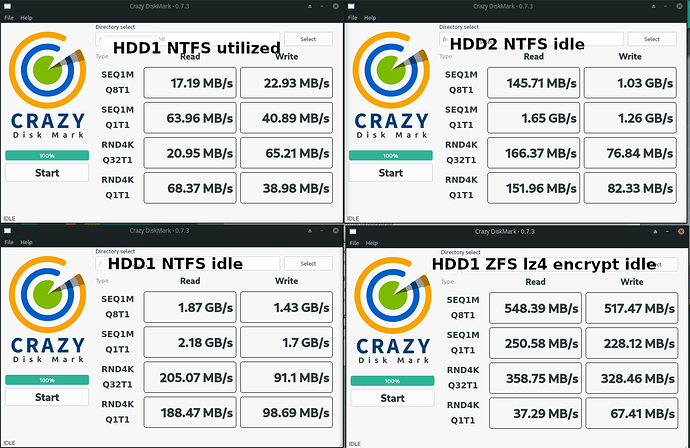
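To have the module loaded automatically at every boot, systemd's modules-load mechanism reads module names (one per line) from `*.conf` files in /etc/modules-load.d/. A minimal sketch that writes such a file; the file name zfs.conf is my own choice, and the optional path argument only exists so the function can be tried on a scratch file:

```shell
# Hypothetical helper: write a modules-load.d entry so that
# systemd-modules-load loads the zfs module at boot.
enable_zfs_at_boot() {
    conf=${1:-/etc/modules-load.d/zfs.conf}
    # tee runs via sudo because /etc is owned by root
    echo zfs | sudo tee "$conf" > /dev/null
}
```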

Create a pool named “zfsp” with encryption and compression, and with atime off to improve IOPS:

sudo zpool create -o feature@encryption=enabled -O encryption=on -O keyformat=passphrase -O compression=on -O atime=off zfsp /dev/sdb
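The two option letters in this command act on different things: lowercase `-o` sets properties of the pool itself (such as the `feature@encryption` flag), while uppercase `-O` sets file-system properties on the pool's root dataset, which is why `encryption=on` is only accepted with `-O`. The same command split up with comments, as a sketch with a hypothetical helper name; as far as I know `feature@encryption` is already enabled by default on newly created pools, so that option is probably redundant:

```shell
# Hypothetical helper: create a pool whose root dataset is encrypted.
#   $1: pool name, e.g. zfsp
#   $2: disk, e.g. /dev/sdb (its contents are overwritten)
# -o sets pool properties; -O sets properties of the root dataset,
# which child datasets inherit unless overridden.
create_encrypted_pool() {
    sudo zpool create \
        -o feature@encryption=enabled \
        -O encryption=on \
        -O keyformat=passphrase \
        -O compression=on \
        -O atime=off \
        "$1" "$2"
}
```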

The command mounted the drive decrypted, though my mountpoint /zfsp had root access rights, not user ones :-/, so I had to change it:

sudo chown -R user:user /zfsp

I could then copy files and such to my mount point /zfsp.

Unmounting and locking (encrypting) the pool and dataset:

sudo zpool export zfsp

(“zfs umount” alone does not lock it, as the dataset can then be mounted again without the passphrase)
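To lock an encrypted dataset without exporting the whole pool, it can be unmounted and its key then unloaded with `zfs unload-key`; `zfs load-key` followed by `zfs mount` reverses that. A minimal sketch with hypothetical helper names, assuming zfsp is the encryption root (as it is when encryption is set with `zpool create -O`):

```shell
# Hypothetical helpers: lock/unlock an encrypted dataset while the pool stays imported.
lock_dataset() {
    # The key can only be unloaded once no dataset using it is mounted;
    # child datasets sharing the key may need unmounting as well.
    sudo zfs unmount "$1" || return 1
    sudo zfs unload-key "$1"
}

unlock_dataset() {
    # Prompts for the passphrase (keylocation=prompt), then mounts.
    # Child datasets can be mounted afterwards with "zfs mount -a".
    sudo zfs load-key "$1" || return 1
    sudo zfs mount "$1"
}
```

Usage: `lock_dataset zfsp`, later `unlock_dataset zfsp`.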

Loading the pool again (if that is the correct term):

sudo zpool import zfsp

Mounting/decrypting the dataset (if that is the correct term) with the -l parameter, which asks for the passphrase (otherwise it complains “encryption key not loaded”):

sudo zfs mount -l zfsp

Then I am unsure whether I need to create a dataset at all, since the “zpool create” command above already gave me working encrypted storage. Anyway, I tried it:

zpool status; sudo zfs create -o compression=on -o atime=off -o encryption=on -o keyformat=passphrase zfsp/vd

Result:

$ sudo zfs list

NAME USED AVAIL REFER MOUNTPOINT

zfsp 406K 14,1T 115K /zfsp

zfsp/vd 99,5K 14,1T 99,5K /zfsp/vd

I am unsure how this vd dataset is beneficial, or whether I need it at all, since I was able to write directly to /zfsp anyway.
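One way to see how the two datasets relate is the `encryptionroot` property. As I understand the ZFS encryption model, a child created with a plain `zfs create zfsp/docs` inherits encryption and the key from zfsp, while passing `-o encryption=on -o keyformat=passphrase` (as in the command above) makes zfsp/vd its own encryption root with a separate passphrase. A small sketch; the helper names and `zfsp/docs` are hypothetical:

```shell
# Hypothetical helpers for child datasets under an encrypted pool.

# Child that shares the parent's key: unlocked whenever the parent is.
create_inheriting_child() {
    sudo zfs create "$1"
}

# Show which encryption root (and therefore which passphrase) each dataset uses.
show_encryption_roots() {
    zfs get -r -o name,value encryptionroot "$1"
}
```

Usage: `show_encryption_roots zfsp`; zfsp/vd created as above should list itself as its encryption root.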

Your feedback/ideas are very welcome. Thank you in advance if you get time to reply.