Hi,

My problem: writes on my NVMe SSD are very slow.

Second problem (I think it is connected to the first): running btrfs balance regularly locks up my computer for multiple seconds. Programs like the KDE UI, Firefox and Thunderbird regularly crash when I try to interact with them during a rebalance.

I thought I was an experienced Linux user, but I couldn’t find a solution (the thread "Btrfs slow read/write speed on nvme" did not help me).

My suspicion: maybe I did something wrong when I extended the Btrfs partition after migrating to a 2 TB and later to a 4 TB SSD.

I’m also using snapshots (Timeshift creates a new snapshot on each boot) and quotas (for size information in Timeshift).

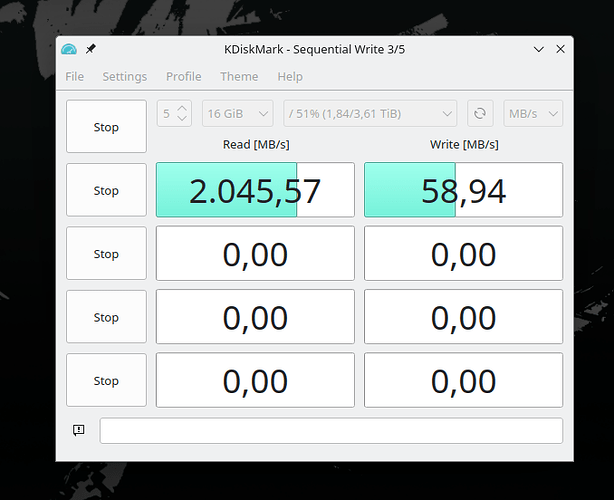

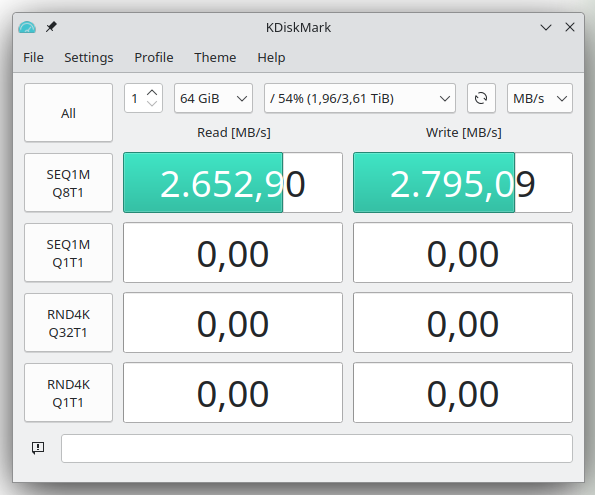

Read/Write performance:

The performance is similar on kernel 6.1, 6.2 and 6.3.

/etc/fstab:

# /etc/fstab: static file system information.

#

# Use 'blkid' to print the universally unique identifier for a device; this may

# be used with UUID= as a more robust way to name devices that works even if

# disks are added and removed. See fstab(5).

#

# <file system> <mount point> <type> <options> <dump> <pass>

UUID=B253-C727 /boot/efi vfat umask=0077 0 2

/dev/mapper/luks-205e4e87-163d-4dae-bd7c-3cda9971fa7f / btrfs subvol=/@,defaults,noatime,discard=async,ssd,clear_cache,nospace_cache 0 0

/dev/mapper/luks-205e4e87-163d-4dae-bd7c-3cda9971fa7f /home btrfs subvol=/@home,defaults,noatime,discard=async,ssd,clear_cache,nospace_cache 0 0

/dev/mapper/luks-205e4e87-163d-4dae-bd7c-3cda9971fa7f /var/cache btrfs subvol=/@cache,defaults,noatime,discard=async,ssd,clear_cache,nospace_cache 0 0

/dev/mapper/luks-205e4e87-163d-4dae-bd7c-3cda9971fa7f /var/log btrfs subvol=/@log,defaults,noatime,discard=async,ssd,clear_cache,nospace_cache 0 0

/dev/mapper/luks-8b452496-c619-4399-b0f5-0e387d52567b swap swap defaults,noatime,sdd 0 0

tmpfs /tmp tmpfs defaults,noatime,mode=1777 0 0

/dev/disk/by-id/wwn-0x50014ee26a3cac5a-part1 /mnt/backup_harddrive_green_1 auto nosuid,nodev,nofail,noatime,noauto,x-gvfs-show 0 0

/dev/disk/by-id/wwn-0x50014ee211edd557-part1 /mnt/backup_harddrive_red_1 auto nosuid,nodev,nofail,noatime,noauto,x-gvfs-show 0 0

/dev/disk/by-id/wwn-0x50014ee2bc98dfb8-part1 /run/media/hacker/BackupBlau/ auto nosuid,nodev,nofail,noatime,noauto,x-gvfs-show 0 0

/dev/disk/by-id/wwn-0x50014ee215b34320-part1 /mnt/backup_violett auto nosuid,nodev,nofail,noauto,noatime,x-gvfs-show 0 0

/dev/disk/by-id/wwn-0x50014ee2c0719f7e-part1 /mnt/backup_tuerkis auto nosuid,nodev,nofail,noauto,noatime,x-gvfs-show 0 0

/dev/disk/by-uuid/a2766337-56fd-44b7-a0d6-df8521308e79 /mnt/linux_games auto nosuid,nodev,nofail,noatime,x-gvfs-show 0 0

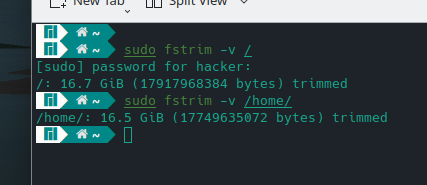

I also enabled fstrim:

sudo systemctl enable --now fstrim.timer && sudo systemctl start fstrim

btrfs fi us / :

WARNING: cannot read detailed chunk info, per-device usage will not be shown, run as root

Overall:

Device size: 3.61TiB

Device allocated: 1.88TiB

Device unallocated: 1.73TiB

Device missing: 0.00B

Device slack: 16.00EiB

Used: 1.84TiB

Free (estimated): 1.76TiB (min: 920.58GiB)

Free (statfs, df): 1.76TiB

Data ratio: 1.00

Metadata ratio: 2.00

Global reserve: 512.00MiB (used: 0.00B)

Multiple profiles: no

Data,single: Size:1.87TiB, Used:1.83TiB (98.12%)

Metadata,DUP: Size:7.00GiB, Used:5.91GiB (84.48%)

System,DUP: Size:32.00MiB, Used:288.00KiB (0.88%)

sudo btrfs device stats /

[/dev/mapper/luks-205e4e87-163d-4dae-bd7c-3cda9971fa7f].write_io_errs 0

[/dev/mapper/luks-205e4e87-163d-4dae-bd7c-3cda9971fa7f].read_io_errs 0

[/dev/mapper/luks-205e4e87-163d-4dae-bd7c-3cda9971fa7f].flush_io_errs 0

[/dev/mapper/luks-205e4e87-163d-4dae-bd7c-3cda9971fa7f].corruption_errs 522

[/dev/mapper/luks-205e4e87-163d-4dae-bd7c-3cda9971fa7f].generation_errs 0
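
Only one of the counters above is non-zero (corruption_errs). As a minimal sketch for spotting such counters at a glance, the shell function below filters the stats output down to the lines whose value is not 0. The name `nonzero_stats` is made up for this post, and it assumes the two-column `[device].counter value` format shown above.

```shell
# Hypothetical helper: keep only the "btrfs device stats" lines whose
# counter (second column) is not zero. Reads the stats output on stdin.
nonzero_stats() {
  awk 'NF == 2 && $2 != 0'
}

# Usage (needs root, so left as a comment):
#   sudo btrfs device stats / | nonzero_stats
```

Fed the output above, it would print only the corruption_errs line.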