On your TrueNAS Core server:

System → Tunables → Add

Variable: vfs.zfs.arc.meta_min

Value: 4294967296

Type: sysctl

Comment: Allow wider metadata retention in ARC
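The Value field takes bytes, so 4294967296 is 4 × 1024³, i.e. 4 GiB. If you want a different floor, the same conversion can be done in the shell. This is a small sketch; gib_to_bytes is a hypothetical helper, not a TrueNAS command.

```shell
#!/bin/sh
# Convert a size in GiB to the byte count the Tunable's Value field expects.
# gib_to_bytes is a hypothetical helper, not part of TrueNAS.
gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

gib_to_bytes 4   # 4294967296, the value used above
gib_to_bytes 8   # 8589934592
```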

The change takes effect when the server reboots, or you can apply it immediately with the following command on the server:

sysctl vfs.zfs.arc.meta_min=4294967296

The next time your ARC fills up with metadata, it should stay cached for significantly longer.

At first you won’t see any difference. Keep using your SMB shares, though, and over time you’ll notice they’re more responsive, with less time spent crawling your server’s drives.
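To confirm the new floor is active and watch metadata accumulate in ARC over the following days, something like the sketch below can be run on the server now and then. It assumes a TrueNAS Core release whose OpenZFS still exports arc_meta_used under kstat.zfs.misc.arcstats; newer OpenZFS releases reworked ARC metadata accounting, so that name may differ. show_arc_meta is a hypothetical helper.

```shell
#!/bin/sh
# Run on the TrueNAS Core server (FreeBSD sysctl syntax).
# show_arc_meta is a hypothetical helper: it prints the configured metadata
# floor and the metadata currently held in ARC, both in MiB.
show_arc_meta() {
  # -n prints only the value; if an OID is missing, fall back to 0.
  floor=$(sysctl -n vfs.zfs.arc.meta_min 2>/dev/null)
  used=$(sysctl -n kstat.zfs.misc.arcstats.arc_meta_used 2>/dev/null)
  echo "meta_min floor: $(( ${floor:-0} / 1048576 )) MiB"
  echo "metadata in ARC: $(( ${used:-0} / 1048576 )) MiB"
}

show_arc_meta
```

With the value above, the first line should read 4096 MiB.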

You can even force metadata into the ARC by issuing a find command on the server, such as:

find /mnt/poolname > /dev/null

And,

du -hs /mnt/poolname

Prefix these with “sudo” if you’re logged in via SSH as a regular user.

(The two commands above aren’t necessary. In fact, I’d skip them for now: you don’t want to waste ARC on iocage metadata. Just create the Tunable and apply the sysctl value, so you don’t have to reboot first.)
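If you do decide to warm the cache later, one option is a find that walks the pool but skips iocage, so ARC isn’t spent on jail metadata. This is a sketch: warm_metadata is a hypothetical helper, /mnt/poolname is the placeholder used above, and iocage is assumed to live directly under the pool’s mountpoint (its default location on TrueNAS Core).

```shell
#!/bin/sh
# warm_metadata is a hypothetical helper. It walks a pool's directory tree
# but does not descend into iocage.
# Pass the pool path without a trailing slash so the -path pattern matches.
warm_metadata() {
  pool=$1
  # -prune stops find at the iocage directory; -o -print lists everything else.
  find "$pool" -path "$pool/iocage" -prune -o -print
}

# Usage (output discarded; reading the directory tree is the point):
# warm_metadata /mnt/poolname > /dev/null
```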

Use cache=loose in the mount parameters:

If you’re the only person accessing the SMB share(s), you can also safely use cache=loose rather than cache=strict in the client’s mount options for better performance. (This makes a huge difference.) You won’t notice the effect until after some use, once your client has more data and metadata cached in local RAM.
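On a Linux client with cifs-utils, cache=loose goes into the mount.cifs option string. A sketch follows; mount_smb_loose is a hypothetical helper, and the server, share, mount point, and user name are placeholders.

```shell
#!/bin/sh
# Linux client with cifs-utils installed. Mounts an SMB share with cache=loose
# instead of the default cache=strict. Only appropriate when no other client
# changes files on the share while it is mounted.
# mount_smb_loose is a hypothetical helper; all arguments are placeholders.
mount_smb_loose() {
  server=$1      # e.g. truenas.local
  share=$2       # e.g. media
  mountpoint=$3  # e.g. /mnt/media
  smb_user=$4    # account on the TrueNAS server

  # uid/gid make the mounted files appear owned by the local user.
  sudo mount -t cifs "//$server/$share" "$mountpoint" \
    -o "username=$smb_user,uid=$(id -u),gid=$(id -g),cache=loose"
}

# Usage (prompts for the SMB password):
# mount_smb_loose truenas.local media /mnt/media alice
```

For a permanent mount, the same options can go in the options field of the share’s /etc/fstab entry.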

IMPORTANT EDIT:

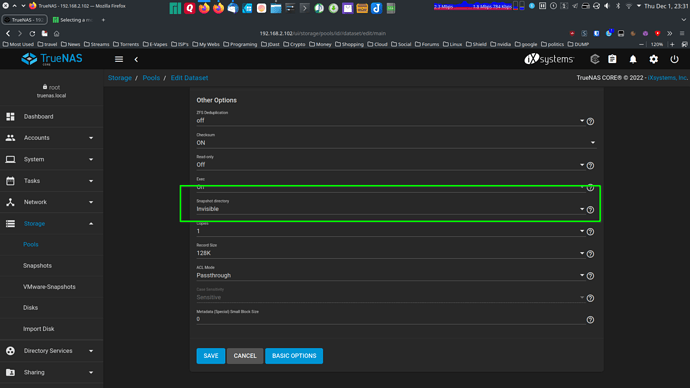

You shouldn’t be sharing the root dataset via SMB. It’s bad practice, and you can run into permission issues. Plus, you’re forcing the server to waste cache on metadata that is almost pointless to the end user, such as everything tucked under iocage.

Instead, share a child dataset or specific dataset(s) containing the files you need to access over SMB. Having the entirety of iocage exposed via SMB is only making your issue worse. If you really need to access everything under iocage via SMB (for some reason), create a separate share for it, or just access it via SSH and/or the iocage command on the server.
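Creating the child dataset and its share is normally done in the web UI. For reference, here is a sketch of the same two steps through the TrueNAS middleware client on the server. It assumes midclt and its pool.dataset.create and sharing.smb.create methods as found on TrueNAS Core, and that the new dataset mounts at /mnt/<pool>/<name> (the default). share_child_dataset and its arguments are hypothetical. You’ll still need to set permissions on the new dataset.

```shell
#!/bin/sh
# Run on the TrueNAS Core server. share_child_dataset is a hypothetical helper;
# pool and name are placeholders (e.g. poolname and media).
share_child_dataset() {
  pool=$1
  name=$2

  # Create poolname/media as a child of the root dataset.
  midclt call pool.dataset.create "{\"name\": \"$pool/$name\"}"
  # Share only that dataset, not the pool root (and not iocage).
  midclt call sharing.smb.create "{\"path\": \"/mnt/$pool/$name\", \"name\": \"$name\"}"
}

# Usage:
# share_child_dataset poolname media
```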