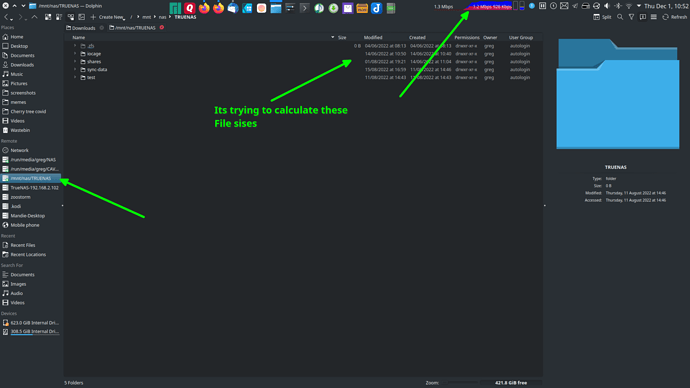

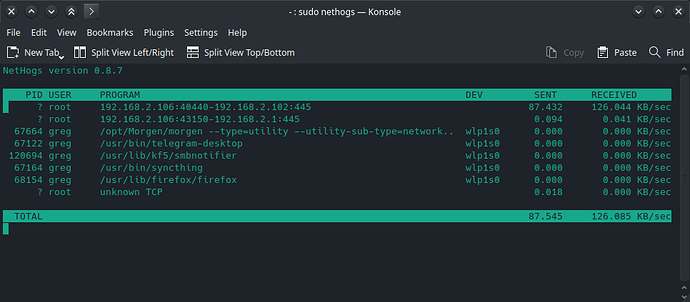

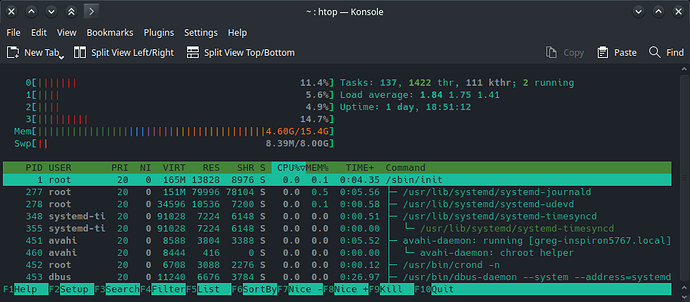

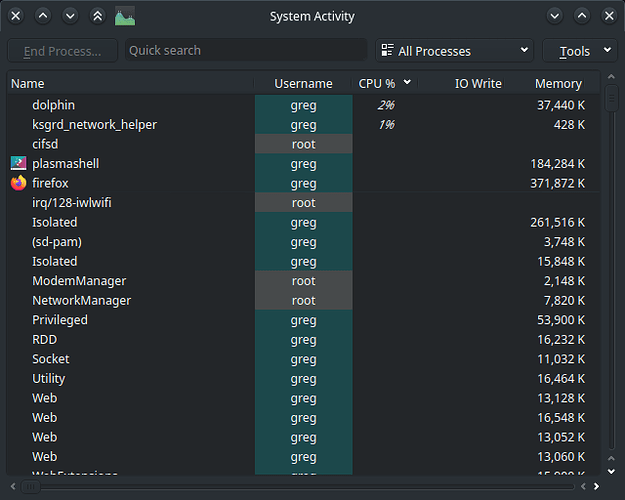

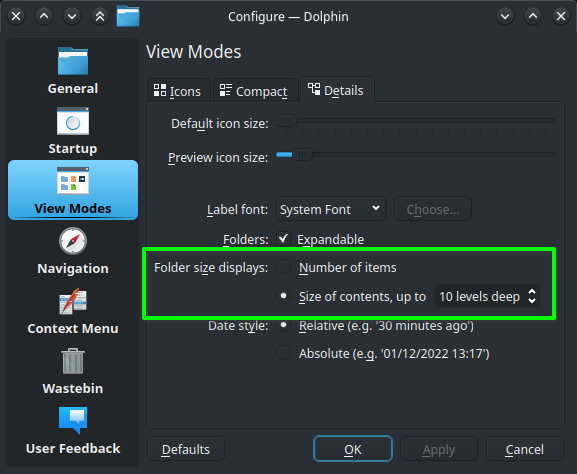

When I select a mounted NAS drive in Dolphin, it starts using my network constantly. I suspect it's doing some kind of indexing, or trying to calculate file sizes and file counts, but it does not stop until I close Dolphin. Even if I change back to my home directory or any other directory, the network activity continues.

If I close Dolphin while it is showing the NAS, then the next time I open Dolphin it starts on the NAS drive and the network activity begins again, even if I switch straight out of the NAS. I end up having to change to my home directory, close Dolphin, and open it again just to stop the network activity.

How do I stop it from doing this?

This is how the NAS is mounted:

❱mount | grep TRUE

//192.168.2.102/main on /mnt/nas/TRUENAS type cifs (rw,nosuid,nodev,noexec,relatime,vers=3.1.1,cache=strict,username=jackdinn,uid=1000,noforceuid,gid=1000,noforcegid,addr=192.168.2.102,file_mode=0755,dir_mode=0755,iocharset=utf8,soft,nounix,mapposix,noperm,rsize=4194304,wsize=4194304,bsize=1048576,echo_interval=60,actimeo=1,closetimeo=5,user,_netdev)
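In case the caching settings matter here, this is a small sketch that pulls just the cache and timeout options out of that mount line. The `opts` string is copied by hand from the output above (shortened), and `cache_opts` is a helper name made up for this post, not part of any tool.

```shell
# Mount options copied by hand from the output above (shortened for readability).
opts='rw,relatime,vers=3.1.1,cache=strict,soft,echo_interval=60,actimeo=1,closetimeo=5'

# Hypothetical helper: print only the caching/timeout related options, one per line.
cache_opts() {
  printf '%s\n' "$1" | tr ',' '\n' | grep -E '^(cache|actimeo|closetimeo|echo_interval)='
}

cache_opts "$opts"
```

As far as I understand it, `actimeo=1` means cached file attributes are only kept for one second, so I am not sure whether that plays a part in the constant traffic.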