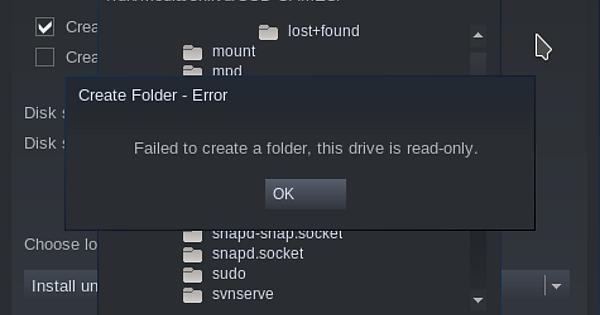

So I switched from Windows to Manjaro KDE earlier today, and it makes my PC feel a lot smoother; I was having quite a lot of issues with Windows. However, the partitions I made on my other drives are read-only, so I can't install any games or really do anything with them. I also have a second issue: I used the package downloader to put something into the user folder, but I can't take it out of there or make a folder in it because of permissions. I would really appreciate it if someone could help me fix both issues.

You probably need to change ownership of the partition.

Determine the mount point: in a terminal, type `df`.
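If `df` prints too much, a narrower listing can help. This is only a sketch: it assumes the drives were auto-mounted by the desktop under `/run/media/<user>/` (where the path in the command below points), and `list_media_mounts` is a hypothetical helper name. The `Type` column is worth checking too: `chown` works on Linux filesystems such as ext4 or btrfs, while NTFS (`fuseblk`, `ntfs3`) and FAT (`vfat`) partitions take their owner from mount options such as `uid=` and `gid=` instead.

```shell
# Sketch: list only the drives auto-mounted under /run/media.
# list_media_mounts is a hypothetical helper name, not a system command.
list_media_mounts() {
  # source = device, fstype = filesystem type, target = mount point.
  # Keep the header line, then any row whose mount point is under /run/media.
  df --output=source,fstype,target | awk 'NR == 1 || $3 ~ /^\/run\/media\//'
}

# Example:
# list_media_mounts
```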

Then, once you have the mount point, run:

`sudo chown your_username:your_username "/run/media/your_username/drive_mount_name"`

(Keep the quotes around the path if the drive's name contains spaces.)
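The same fix wrapped in a small Bash function, as a sketch rather than a tested tool. Assumptions: the partition is ext4 or btrfs, and `take_ownership` is a hypothetical name. It uses your primary group (`id -gn`) rather than assuming the group is named after you, and it quotes the path so a drive label with a space stays one argument.

```shell
# Sketch only: give the logged-in user ownership of a mounted partition.
# Assumes ext4/btrfs; take_ownership is a hypothetical helper name.
take_ownership() {
  local mount_point=$1          # keep quoted everywhere: labels may contain spaces
  local user group
  user=$(id -un)                # current user's name
  group=$(id -gn)               # current user's primary group

  if [[ ! -d "$mount_point" ]]; then
    echo "not a directory: $mount_point" >&2
    return 1
  fi

  # Nothing to do if the mount point already belongs to this user and group.
  if [[ "$(stat -c '%U:%G' "$mount_point")" == "$user:$group" ]]; then
    echo "already owned by $user:$group"
    return 0
  fi

  # Changes only the top-level directory; existing files keep their owner
  # (chown -R would change those as well).
  sudo chown "$user:$group" "$mount_point"
}

# Example, with a label containing a space:
# take_ownership "/run/media/$USER/My Games"
```

Escaping the space instead (`/run/media/$USER/My\ Games`) works just as well; quoting is simply harder to get wrong.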


It was an ownership issue, and the fix worked once I got past the space in the drive's name (escaping it with `\`). XD
