Btrfs is a CoW (copy-on-write) filesystem with more features than Ext4; that is why a CoW filesystem is usually slower than a non-CoW one.

But is it really that much? I mean 645 MB/s compared to 3.3 GB/s… Really? Can Btrfs really not read more than 645 MB per second under any circumstances, even on new hardware?

KDiskMark, which uses the fio benchmark, does not reflect a real-world scenario.

I used cat to read a large 30 GB 7z archive of game data on two different partitions (Ext4 and Btrfs) on the same NVMe SSD.
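To turn a timing like this into a throughput figure, a small Bash function can time one sequential read and divide the file size by the elapsed time. This is only a sketch: the name read_throughput is hypothetical, it assumes GNU stat and date, and it counts 1 MB as 10^6 bytes. A repeated read of the same file may be served from the page cache instead of the SSD, so for a cold-cache measurement the cache would typically be dropped first (as root) through /proc/sys/vm/drop_caches.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the thread): time one sequential read of a
# file and print the throughput in MB/s (1 MB = 10^6 bytes).
# Assumes GNU coreutils (stat -c, date +%N).
# For a cold-cache run, drop the page cache first, as root:
#   sync; echo 3 > /proc/sys/vm/drop_caches
read_throughput() {
    local file=$1 bytes start end
    bytes=$(stat -c %s -- "$file")   # file size in bytes
    start=$(date +%s%N)              # wall-clock start in nanoseconds
    cat -- "$file" > /dev/null       # the same read the thread times with `time cat`
    end=$(date +%s%N)
    # bytes/ns -> MB/s; clamp the interval so a tiny file cannot divide by zero
    awk -v b="$bytes" -v ns="$((end - start))" \
        'BEGIN { if (ns < 1) ns = 1; printf "%.1f MB/s\n", (b / 1e6) / (ns / 1e9) }'
}
```

Running read_throughput Game.7z on each partition gives two directly comparable MB/s values instead of two durations.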

In a Bash shell in the terminal:

Btrfs

$ time cat Game.7z > /dev/null

real 0m18,430s

user 0m0,045s

sys 0m10,223s

The read finished in about 18 seconds.

Ext4

$ time cat Game.7z > /dev/null

real 0m28,873s

user 0m0,053s

sys 0m12,955s

The read finished in about 28 seconds.

However, I don’t understand why.

So in your case Btrfs is even faster than Ext4?

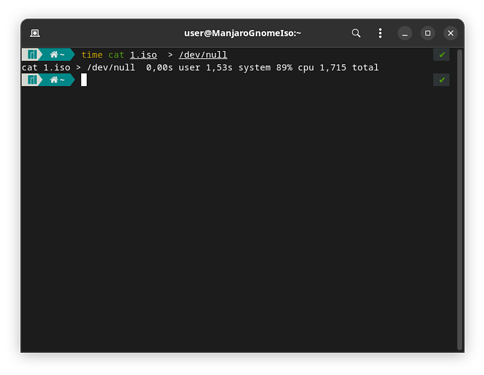

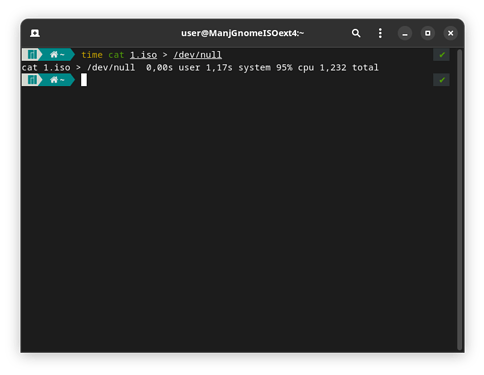

Unfortunately I don’t have a 30 GB file, so I tried the Manjaro.iso, which is only 3.5 GB.

Btrfs:

ext4:

You can pack many different directories together into one new file.tar.

Yes, Btrfs is faster than Ext4 on both of my devices when reading the game data 7z archive.

But I do not notice any difference in boot time or when loading games (I am using Linux kernel 5.18).

On my other device:

Btrfs and Ext4 on the same NVMe SSD, without LUKS:

Btrfs

$ time cat Game.7z > /dev/null

real 0m10,877s

user 0m0,042s

sys 0m8,518s

Ext4

$ time cat Game.7z > /dev/null

real 0m13,300s

user 0m0,029s

sys 0m7,446s

So easy! Now I feel stupid because I didn’t think of it right away.


However, I compressed my biggest folder into a 40.7 GB .zip file. I used .zip because, for some reason, it went faster than .tar.

$ tar -czvf 1.zip ~/Downloads

It’s not related to the topic, but is there a way to use all 16 cores? Nautilus uses only one core for compressing, and so does the command above.

Anyway, this is btrfs:

$ time cat 1.zip > /dev/null

cat 1.zip > /dev/null 0,04s user 23,44s system 94% cpu 24,794 total

And this is ext4:

$ time cat 1.zip > /dev/null

cat 1.zip > /dev/null 0,05s user 14,95s system 98% cpu 15,190 total

So I’m not sure I’m reading this right: which field indicates the actual duration?
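In zsh's default time format, the number just before the word total is the elapsed wall-clock time (what Bash prints as real); user and system are the CPU time spent in user space and in the kernel, and cpu is their share of the elapsed time. A minimal sketch with hypothetical function names, assuming the seconds-only form seen in this thread (runs under one minute) and the decimal comma printed by this locale:

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not from the thread).

# zsh_time_total: read a zsh `time` line on stdin and print the elapsed
# seconds, i.e. the field just before the word "total", with "," -> ".".
zsh_time_total() {
    awk '{
        for (i = 2; i <= NF; i++)
            if ($i == "total") { v = $(i - 1); gsub(/,/, ".", v); print v; exit }
    }'
}

# mb_per_s BYTES SECONDS: convert a size and a duration into MB/s
# (1 MB = 10^6 bytes).
mb_per_s() {
    awk -v b="$1" -v s="$2" 'BEGIN { printf "%.1f\n", (b / 1e6) / s }'
}
```

Piping the Btrfs line above into zsh_time_total prints 24.794, and mb_per_s "$(stat -c %s 1.zip)" 24.794 then turns that duration into a read speed.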

Thanks for helping so far!

Can you show how it is mounted?

mount -t btrfs

mount -t ext4

cat /etc/fstab

Edit: The scheduler can also be a culprit:

grep "" /sys/block/*/queue/scheduler
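In that output the scheduler in square brackets is the one currently in use; the others are only available. A small sketch (the function names are hypothetical) that reads the same sysfs files as the grep above and prints just the active scheduler per block device:

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the bracketed (active) entry from a
# scheduler line such as "[none] mq-deadline kyber bfq".
active_scheduler() {
    sed -n 's/.*\[\([^]]*\)\].*/\1/p'
}

# Print "device: scheduler" for every block device that exposes one.
list_schedulers() {
    local f dev
    for f in /sys/block/*/queue/scheduler; do
        [ -r "$f" ] || continue      # skip if the glob matched nothing
        dev=${f#/sys/block/}         # strip the sysfs prefix ...
        dev=${dev%%/*}               # ... and everything after the device name
        printf '%s: %s\n' "$dev" "$(active_scheduler < "$f")"
    done
}
```

On the system shown in this thread, list_schedulers would report none for nvme0n1 and for each loop device.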

pamac install pigz

tar --use-compress-program="pigz -k -9" -cf 1.tar.gz ~/Downloads

By default gzip uses only one core; pigz is fully compatible but uses all available cores.
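The same idea as a reusable function. This is a sketch rather than a Manjaro tool: make_targz is a hypothetical name, it passes pigz's -p option the core count reported by nproc, and it falls back to plain gzip when pigz is not installed. Note that tar -czvf, as used earlier in the thread, always writes a gzip-compressed tar archive, whatever extension the output file is given.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make_targz OUT DIR
# Archives directory DIR into OUT (.tar.gz), compressing with pigz on all
# cores when available, otherwise with single-threaded gzip.
make_targz() {
    local out=$1 dir=${2%/} comp
    if command -v pigz > /dev/null 2>&1; then
        comp="pigz -p $(nproc)"   # -p: number of compression threads
    else
        comp="gzip"
    fi
    # -C stores paths relative to DIR's parent, e.g. "Downloads/..."
    tar --use-compress-program="$comp" -cf "$out" \
        -C "$(dirname -- "$dir")" "$(basename -- "$dir")"
}
```

For example, make_targz 1.tar.gz ~/Downloads produces an archive that gzip, pigz and tar -xzf can all read.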

Sure, here is the Btrfs installation:

$ mount -t btrfs

/dev/nvme0n1p7 on / type btrfs (rw,relatime,ssd,discard=async,space_cache=v2,subvolid=256,subvol=/@)

/dev/nvme0n1p7 on /home type btrfs (rw,relatime,ssd,discard=async,space_cache=v2,subvolid=257,subvol=/@home)

/dev/nvme0n1p7 on /var/cache type btrfs (rw,relatime,ssd,discard=async,space_cache=v2,subvolid=258,subvol=/@cache)

/dev/nvme0n1p7 on /var/log type btrfs (rw,relatime,ssd,discard=async,space_cache=v2,subvolid=259,subvol=/@log)

$ cat /etc/fstab

# /etc/fstab: static file system information.

#

# Use 'blkid' to print the universally unique identifier for a device; this may

# be used with UUID= as a more robust way to name devices that works even if

# disks are added and removed. See fstab(5).

#

# <file system> <mount point> <type> <options> <dump> <pass>

UUID=68BD-1D13 /boot/efi vfat umask=0077 0 2

UUID=af6ff93b-3809-4e38-9acd-9031213e0a5b / btrfs subvol=/@,defaults,discard=async,ssd 0 0

UUID=af6ff93b-3809-4e38-9acd-9031213e0a5b /home btrfs subvol=/@home,defaults,discard=async,ssd 0 0

UUID=af6ff93b-3809-4e38-9acd-9031213e0a5b /var/cache btrfs subvol=/@cache,defaults,discard=async,ssd 0 0

UUID=af6ff93b-3809-4e38-9acd-9031213e0a5b /var/log btrfs subvol=/@log,defaults,discard=async,ssd 0 0

UUID=4371b365-d8bf-430a-ade2-d4fab1a203ac swap swap defaults,noatime 0 0

tmpfs /tmp tmpfs defaults,noatime,mode=1777 0 0

$ grep "" /sys/block/*/queue/scheduler

/sys/block/loop0/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop1/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop2/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop3/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop4/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop5/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop6/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop7/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/nvme0n1/queue/scheduler:[none] mq-deadline kyber bfq

And this is ext4:

$ mount -t ext4

/dev/nvme0n1p4 on / type ext4 (rw,noatime)

$ cat /etc/fstab

# /etc/fstab: static file system information.

#

# Use 'blkid' to print the universally unique identifier for a device; this may

# be used with UUID= as a more robust way to name devices that works even if

# disks are added and removed. See fstab(5).

#

# <file system> <mount point> <type> <options> <dump> <pass>

UUID=68BD-1D13 /boot/efi vfat umask=0077 0 2

UUID=37921521-2c1a-430c-800b-87d379e6326c / ext4 defaults,noatime 0 1

UUID=4371b365-d8bf-430a-ade2-d4fab1a203ac swap swap defaults,noatime 0 0

tmpfs /tmp tmpfs defaults,noatime,mode=1777 0 0

$ grep "" /sys/block/*/queue/scheduler

/sys/block/loop0/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop1/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop2/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop3/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop4/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop5/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop6/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/loop7/queue/scheduler:[none] mq-deadline kyber bfq

/sys/block/nvme0n1/queue/scheduler:[none] mq-deadline kyber bfq

Great, thank you! Is it possible to make it the default in Nautilus? Sometimes right-clicking a file is easier for me than using the command line.

Add to fstab:

noatime,clear_cache,nospace_cache

and reboot.

space_cache=v2 can improve performance, but to be honest it wears out the SSD/NVMe faster… On HDDs, at least, I would recommend it.

noatime improves latency. On modern systems atime is not needed, especially on desktops.
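To see which options a mount actually uses, including ones the kernel adds by default (such as space_cache=v2 in the mount output above, which the fstab does not list), findmnt -n -o OPTIONS / prints the live option string. A minimal sketch with a hypothetical helper that checks whether an option is present in such a string, matching either a bare flag (noatime) or the key of a key=value option (space_cache):

```shell
#!/usr/bin/env bash
# Hypothetical helper: has_mount_opt OPTIONS OPT
# Succeeds if OPT appears in the comma-separated OPTIONS string, either as a
# bare flag ("noatime") or as the key of a key=value option ("space_cache").
has_mount_opt() {
    local opts=",$1," opt=$2
    [[ $opts == *",$opt,"* || $opts == *",$opt="* ]]
}

# Example use: report whether the root filesystem is mounted with noatime.
check_root_noatime() {
    if has_mount_opt "$(findmnt -n -o OPTIONS /)" noatime; then
        echo "/ is mounted with noatime"
    else
        echo "/ is not mounted with noatime"
    fi
}
```

Checking after the reboot, rather than reading fstab, shows what the kernel really applied.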

No idea. Maybe ask upstream (Issues · GNOME / Files · GitLab), but in fact this menu is hardcoded.

Just to be sure, you mean like this?

# /etc/fstab: static file system information.

#

# Use 'blkid' to print the universally unique identifier for a device; this may

# be used with UUID= as a more robust way to name devices that works even if

# disks are added and removed. See fstab(5).

#

# <file system> <mount point> <type> <options> <dump> <pass>

UUID=68BD-1D13 /boot/efi vfat umask=0077 0 2

UUID=af6ff93b-3809-4e38-9acd-9031213e0a5b / btrfs subvol=/@,defaults,discard=async,ssd,noatime,clear_cache,nospace_cache 0 0

UUID=af6ff93b-3809-4e38-9acd-9031213e0a5b /home btrfs subvol=/@home,defaults,discard=async,ssd,noatime,clear_cache,nospace_cache 0 0

UUID=af6ff93b-3809-4e38-9acd-9031213e0a5b /var/cache btrfs subvol=/@cache,defaults,discard=async,ssd,noatime,clear_cache,nospace_cache 0 0

UUID=af6ff93b-3809-4e38-9acd-9031213e0a5b /var/log btrfs subvol=/@log,defaults,discard=async,ssd,noatime,clear_cache,nospace_cache 0 0

UUID=4371b365-d8bf-430a-ade2-d4fab1a203ac swap swap defaults,noatime 0 0

tmpfs /tmp tmpfs defaults,noatime,mode=1777 0 0

Everything is correct, BUT disabling fsck for Btrfs is a bad idea. Anyway, it is your system.

0 0 → 0 1

Great, thank you!

Now I get this on Btrfs:

$ time cat 1.zip > /dev/null

cat 1.zip > /dev/null 0,03s user 17,28s system 89% cpu 19,325 total

I am not sure, but I think the fsck field in fstab applies to Ext4 and not to Btrfs.

From the Arch Wiki:

If the root file system is btrfs or XFS, the fsck order should be set to 0 instead of 1.
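The fsck order is the sixth field (fs_passno) of each fstab entry, and fstab(5) treats a missing sixth field as 0, meaning the filesystem is not checked at boot. A small sketch with a hypothetical function name that lists the mount point, type and pass number of every entry, so the values discussed here (0 for Btrfs, 1 for an Ext4 root) can be checked at a glance:

```shell
#!/usr/bin/env bash
# Hypothetical helper: fstab_passno [FILE]
# Prints "mountpoint type passno" for each non-blank, non-comment line of
# FILE (default /etc/fstab). A missing 6th field counts as 0, as in fstab(5).
fstab_passno() {
    awk 'NF && $1 !~ /^#/ { print $2, $3, ($6 == "" ? 0 : $6) }' "${1:-/etc/fstab}"
}
```

With the Btrfs fstab above it would print / btrfs 0 for the root entry; with the Ext4 fstab, / ext4 1.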

Check the output of cat /usr/bin/fsck.btrfs:

# fsck.btrfs is a type of utility that should exist for any filesystem and is

# called during system setup when the corresponding /etc/fstab entries contain

# non-zero value for fs_passno. (See fstab(5) for more.)

#

# Traditional filesystems need to run their respective fsck utility in case the

# filesystem was not unmounted cleanly and the log needs to be replayed before

# mount. This is not needed for BTRFS. You should set fs_passno to 0.

#

# If you wish to check the consistency of a BTRFS filesystem or repair a

# damaged filesystem, see btrfs(8) subcommand 'check'. By default the

# filesystem consistency is checked, the repair mode is enabled via --repair

# option (use with care!).

AUTO=false

while getopts ":aApy" c

do

case $c in

a|A|p|y) AUTO=true;;

esac

done

shift $(($OPTIND - 1))

eval DEV=\${$#}

if [ ! -e $DEV ]; then

echo "$0: $DEV does not exist"

exit 8

fi

if ! $AUTO; then

echo "If you wish to check the consistency of a BTRFS filesystem or"

echo "repair a damaged filesystem, see btrfs(8) subcommand 'check'."

fi

exit 0

A real-world copying benchmark using cp:

Let’s check: copying the 30 GB game data archive within the same filesystem.

Ext4:

$ time cp Game.7z Game1.7z

real 0m24,201s

user 0m0,001s

sys 0m21,379s

Btrfs:

$ time cp Game.7z Game1.7z

real 0m0,011s

user 0m0,001s

sys 0m0,003s

Btrfs: copying the data from one subvolume to another (Linux kernel 5.17+ improved deduplication):

$ time sudo cp Game.7z /opt/Game1.7z

real 0m2,141s

user 0m0,038s

sys 0m0,016s

Btrfs takes no additional space because of its deduplication ability: the copy shares the original file’s data extents (a reflink) instead of writing them again.

Because of deduplication, it is difficult to benchmark writes on Btrfs.
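One way around this is to force a full data copy. GNU cp's --reflink option controls cloning: recent coreutils (9.0 and later) default to --reflink=auto, which on Btrfs shares extents instead of copying them, while --reflink=never always writes the data. A minimal sketch (timed_full_copy is a hypothetical name; GNU coreutils assumed) that also syncs the target filesystem, so data still sitting in the page cache is counted as written:

```shell
#!/usr/bin/env bash
# Hypothetical helper: timed_full_copy SRC DST
# Copies SRC to DST without reflinks (real data is written even on Btrfs),
# flushes the destination filesystem, and prints the elapsed seconds.
# Assumes GNU coreutils: cp --reflink, sync -f, date +%N.
timed_full_copy() {
    local src=$1 dst=$2 start end
    start=$(date +%s%N)
    cp --reflink=never -- "$src" "$dst" || return
    sync -f -- "$dst"            # flush the filesystem that holds DST
    end=$(date +%s%N)
    awk -v ns="$((end - start))" 'BEGIN { printf "%.3f s\n", ns / 1e9 }'
}
```

Running timed_full_copy Game.7z Game1.7z on both the Ext4 and the Btrfs partition then compares actual writes rather than a reflink against a full copy.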

Copying data from one NVMe SSD to a Btrfs filesystem on another NVMe SSD (both SSDs are similarly fast):

$ time sudo cp Game.7z /run/media/zesko/Backup/Game1.7z

real 0m19,409s

user 0m0,046s

sys 0m18,632s

btrfs is slower than other filesystems.

- But it depends on the circumstances.

- Btrfs has a lot of safety features, and they do not come without cost.

Enable compression for Btrfs, and you may gain some space and speed.

If you are looking for speed, Btrfs may not be the best choice!

You can find good information about Btrfs in the wiki.

So I should keep 0 0 on Btrfs?

0 0 is the default on Btrfs; on Ext4 it’s 0 1.

My test installation with Ext4 has only a 70 GB root partition, so I had to generate another test file (25.6 GB).

(Thanks @megavolt, using pigz and all 16 cores it’s done in seconds)

ext4:

$ time cp Test.tar.gz Test1.tar.gz

cp -i Test.tar.gz Test1.tar.gz 0,00s user 27,48s system 99% cpu 27,760 total

Btrfs:

(unfortunately I don’t have a second NVMe built in)

$ time cp Test.tar.gz Test1.tar.gz

cp -i Test.tar.gz Test1.tar.gz 0,00s user 0,11s system 91% cpu 0,126 total

Btrfs: copying the data from one subvolume to another:

$ time sudo cp Test.tar.gz /opt/Test1.tar.gz

[sudo] password for user:

sudo cp Test.tar.gz /opt/Test1.tar.gz 0,02s user 25,88s system 92% cpu 28,093 total

I made the changes, but did they gain any speed?

So even if the changes to fstab gained some speed, I guess it is still slower. But how much slower is it, and is this just the normal slowdown? And based on my read/write tests before and after, did the changes to fstab do anything? Like I said, I don’t quite understand the output. Which number shows the actual speed or duration?

But if this is normal in the end, I guess there’s nothing I can do… I just wanted to be sure.

And thanks again for your help so far.

Yes

Which Linux kernel do you use?

Did you run sudo btrfs balance start -v /?

My fstab

UUID=bd9ea09d-153e-4e0c--b3e6cbcbab86f0 / btrfs subvol=/@,defaults,noatime,compress=zstd 0 0

Try changing compress=zstd to compress=zstd:1.

There is no need for clear_cache,nospace_cache; space_cache=v2 is already the default.

You do not need discard=async in fstab; just use sudo systemctl enable --now fstrim.timer.

I use Linux 5.15.55-1 LTS

Nope, but I did it now.

$ sudo btrfs balance start -v /

[sudo] password for user:

WARNING:

Full balance without filters requested. This operation is very

intense and takes potentially very long. It is recommended to

use the balance filters to narrow down the scope of balance.

Use 'btrfs balance start --full-balance' option to skip this

warning. The operation will start in 10 seconds.

Use Ctrl-C to stop it.

10 9 8 7 6 5 4 3 2 1

Starting balance without any filters.

Dumping filters: flags 0x7, state 0x0, force is off

DATA (flags 0x0): balancing

METADATA (flags 0x0): balancing

SYSTEM (flags 0x0): balancing

Done, had to relocate 303 out of 303 chunks

It took a while, but after a reboot I got this (note that I haven’t made any further changes to fstab since I changed the fsck value from 1 back to 0):

Btrfs read:

$ time cat Test.tar.gz > /dev/null

cat Test.tar.gz > /dev/null 0,04s user 12,96s system 65% cpu 19,940 total

Btrfs write, copying the data from subvolume to subvolume:

$ time sudo cp Test.tar.gz /opt/Test1.tar.gz

[sudo] password for user:

sudo cp Test.tar.gz /opt/Test1.tar.gz 0,02s user 23,34s system 90% cpu 25,734 total

So it doesn’t matter whether clear_cache,nospace_cache and space_cache=v2 are in fstab, because it is already the default?

What about discard=async and ssd? They have been there since the installation…

So in your opinion I should enable fstrim.timer, delete discard=async, ssd, clear_cache and nospace_cache from fstab, keep defaults and noatime, and add compress=zstd:1?